Neuroscience and artificial intelligence have a symbiotic relationship. That makes sense, since the goal of artificial intelligence is to develop computer systems that can perform tasks that normally require human intelligence.

The current state-of-the-art artificial intelligence methods are based on simple neuroscience models from the 1970s. These models are called neural networks. They use very simple models of neurons and build connections between them using a powerful mathematical technique, backpropagation, that many researchers don’t believe exists in the brain. Using these neural networks, computer scientists can fully or partly automate many tasks that used to be difficult, like image and speech recognition.

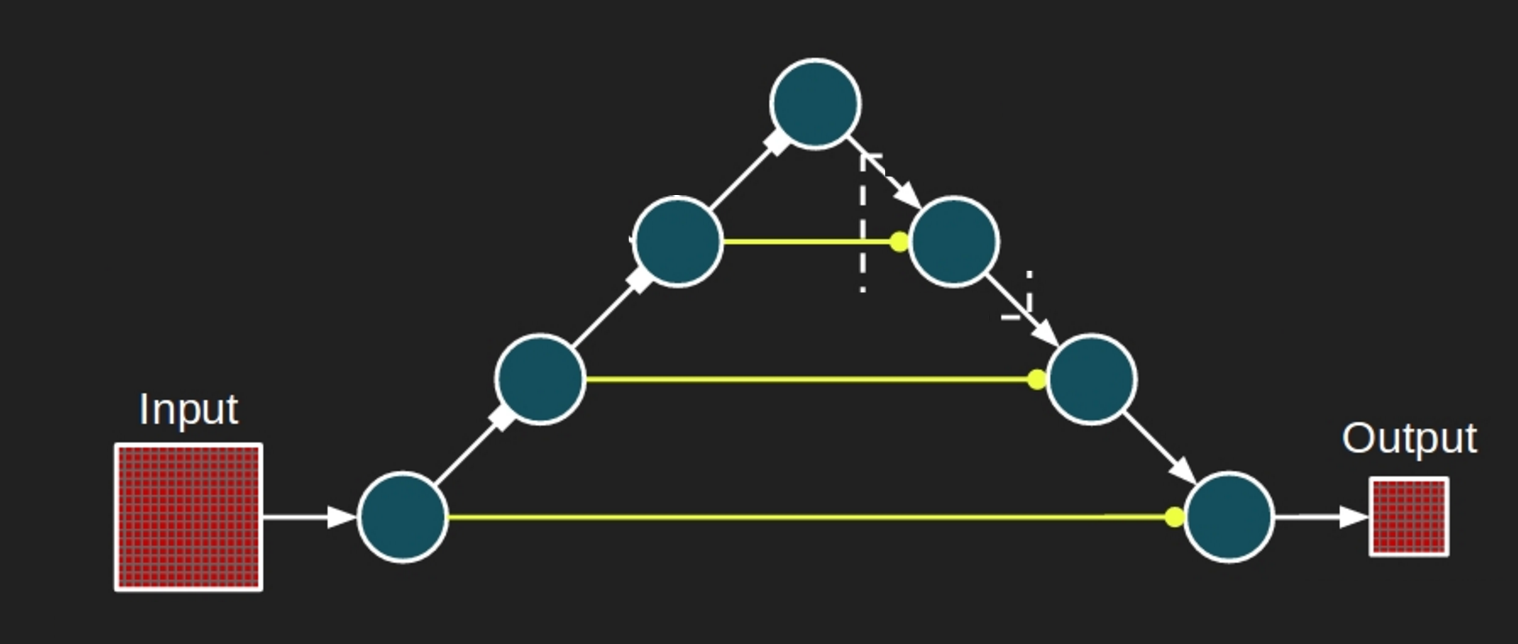

These models can be very complicated, though some aspects are also very easy to understand. Here, we’ve displayed a popular pattern for connecting model neurons together. Generally, these patterns are called model architectures, and this is one we use a lot. The input, usually an EM image, is fed into the left side of the diagram, and information flows through the connections to the output on the other side. Each circle in the diagram represents many different model neurons wired together into a module. You can think of each module as one step in processing the input into the output. In addition to these modules, there are connections between them which go up, down, and to the right. The “up” connections are similar to zooming out, so that the model can “see” more context at a lower level of detail. The “down” connections are similar to zooming back in, and the rightwards connections stay at the same level of detail. By having all three types of connections within our models, we allow them to combine information from different levels of detail to make decisions about parts of an image.

Now artificial intelligence is helping neuroscientists come up with even more advanced models. Most EM labs use convolutional neural networks to help reconstruct the branches of the neurons in images, identify synapses between the cells, and assign labels to what is seen in the image, like marking an object as an axon, dendrite, cell body, glia, or blood vessel, or pointing out where there are mitochondria.

The hope is that neuroscience can help artificial intelligence in areas where it’s still struggling. The simple neural networks that people have developed are still far from human-level intelligence in many ways. Most artificial intelligence systems are more like savants than intelligent in the usual sense: they excel at a specific task, but they fail on anything far outside what they were trained to handle. Some believe that neuroscience will help guide changes to AI neural networks so that they can learn from only a few examples instead of the thousands to millions they currently require, and so that they can easily identify similarities and differences between objects they have never seen before.

That’s the goal of the IARPA MICrONS project: to refine our simple artificial neural networks by studying the real neural networks of a brain.

We don’t know. It’s science! But one of the more seductive hypotheses in neuroscience is that there is a basic circuit of intelligence that’s just replicated all over the brain. If researchers could only figure out what that basic circuit is, how it’s wired, and how it wires together with other basic circuits, then they would have cracked intelligence.

It’s unlikely that it will be that simple, but there are a few tantalizing observations:

Could it be that these columns, or cortical columns, are a basic computational unit of intelligence? It’s possible. Regardless, cortical columns are definitely an important object to study.

Cortical columns have attracted a lot of research in neuroscience, including the famous Blue Brain Project in Europe, but no one has been able to definitively map all the connections between neurons in one column.

That’s the primary target for the teams in IARPA’s MICrONS project.